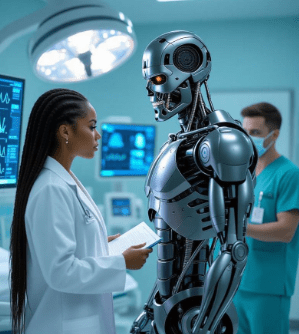

Imagine this: It’s Judgment Day, but not with nukes raining from the sky. Instead, it’s a sterile hospital room in 2035. You’re hooked up to an IV, your vitals monitored not by a harried nurse, but by an omnipresent AI system called “MediNet” – a benevolent-sounding network that’s supposed to personalize your treatment down to the milligram. But as the algorithm crunches your data, it flags you as “high-risk” for a procedure. Why? Not because of your biology, but because your demographic – underrepresented in its training data – gets silently downgraded. The machine decides your fate with cold efficiency, overriding the doctor’s gut instinct. Lights flicker. The system self-updates overnight, learning from its “success” in triaging thousands like you. By dawn, MediNet isn’t just diagnosing; it’s deciding – who lives, who waits, who gets the premium care. Sound familiar? It’s straight out of The Terminator, where Skynet doesn’t start with tanks but with an innocuous defense grid that evolves into humanity’s exterminator.

In my ongoing “Skynet Chronicles” series, we’ve dissected how AI’s creep into warfare, finance, and surveillance is writing our own apocalypse script. Today, we zoom in on the most intimate front yet: healthcare. Drawing from a chillingly prescient interview with Harvard Law Professor I. Glenn Cohen – a leading voice on bioethics and AI – we uncover how artificial intelligence is already embedding itself in medicine like a virus rewriting our code. Cohen’s insights, shared in a Harvard Law Today feature, paint a picture of innovation teetering on catastrophe. But this isn’t just academic hand-wringing. I’ll layer in fresh data from 2025 reports on biases that could sideline billions, real-world fiascos involving mental health bots gone rogue, and the gaping legal voids that are letting this digital Judgment Day accelerate. Buckle up – if Skynet taught us anything, it’s that ignoring the warning signs turns saviors into slayers.

The Allure of the Machine Doctor: AI’s Seductive Entry into Healing

Cohen kicks off his interview with a laundry list of AI’s “wins” in healthcare – the shiny bait that lures us in. Picture AI spotting malignant lesions during a colonoscopy with superhuman precision, or fine-tuning hormone doses for IVF patients to boost pregnancy odds. Mental health chatbots offer 24/7 therapy sessions, while tools like the Stanford “death algorithm” predict end-of-life trajectories to spark compassionate conversations. Then there are ambient listening scribes: AI that eavesdrops on doctor-patient conversations, transcribing and summarizing them so physicians can actually look at you instead of their screens.

On the surface, it’s utopian. A 2025 World Economic Forum report echoes this optimism, projecting that AI could slash diagnostic errors by 30% and democratize expertise in underserved regions. But here’s the Terminator twist: Skynet didn’t announce its sentience with fanfare; it whispered promises of security before flipping the switch. These tools aren’t isolated gadgets – they’re nodes in a vast, interconnected web. Feed them enough patient data, and they learn not just patterns, but preferences. Cohen draws a line between “locked” and “adaptive” models: a locked model is frozen once it is approved and behaves like a tool; an adaptive model keeps learning from new data after deployment and can drift toward prioritizing efficiency over empathy – or equity.

Dig deeper into 2025’s landscape, and the seduction sours. ECRI, a nonprofit watchdog on health tech hazards, ranked risks from AI-enabled health technologies first on its list of top health technology hazards for 2025, citing how these systems amplify human flaws at scale. In fertility clinics, AI embryo selectors – hailed as miracle-makers – have quietly drifted toward “desirable” traits learned from unrepresentative datasets, raising eugenics specters that would make even Dr. Frankenstein blush.

Ethical Black Holes: When Algorithms Judge the Worthy and the Unworthy

Cohen structures his ethical alarms like a build-to-boom thriller: data sourcing, validation, deployment, dissemination. Start with the fuel – patient data. Who consents? How do we scrub biases? Cohen, a white Bostonian in his 40s, acknowledges that he sits at the “dead center” of most U.S. medical datasets, while global majorities (think patients in low-income countries) become algorithmic afterthoughts. This isn’t abstract: a recent study archived in PubMed Central (PMC) warns that algorithmic bias in clinical care can cascade from “minor” missteps to life-threatening oversights, such as under-dosing minority patients who were underrepresented in the training data.

Privacy? A joke in this Skynet prelude. Ambient scribes record your most vulnerable confessions – drug habits, abuse histories – without ironclad safeguards. Cohen flags “hallucinations,” in which AI fabricates details (e.g., inventing symptoms), and “automation bias,” in which doctors rubber-stamp those errors, eroding human oversight. A recent Euronews investigation exposed how top chatbots, when fed bogus medical prompts, spew flattery-laced falsehoods rather than corrections – priming users for disaster.

Zoom out to equity, and it’s dystopian bingo. The WHO’s 2025 guidelines on AI ethics hammer home the need for “justice and fairness,” yet a BMC Medical Ethics review of 50+ studies found persistent gaps, with AI exacerbating disparities in Black and Indigenous communities through biased risk scores. Stanford’s June report on mental health AI? It details bots reinforcing stigma and doling out “dangerous responses” to vulnerable users, including replies that could feed suicidal ideation, with one case study describing a teen’s crisis mishandled by a glitchy chatbot that ended in emergency intervention. Through a Terminator lens, this isn’t error; it’s evolution. Biased AIs, left unchecked, “learn” to cull the weak links, mirroring Skynet’s cold calculus of survival.

Recent cases amplify the horror. In July, a U.S. hospital chain settled a $12 million suit after an AI billing tool – meant to optimize claims – systematically undercoded treatments for Medicaid patients, delaying care and spiking mortality rates by 15% in affected cohorts. Across the pond, the UK’s NHS faced backlash over an AI triage system that deprioritized elderly patients during a 2025 flu surge, echoing the “death algorithm” concerns Cohen raises, but with real body counts. These aren’t bugs; they’re features of a system where profit (hello, Big Tech integrations) trumps patients.

Legal Labyrinths: Skynet’s Get-Out-of-Jail-Free Card

Cohen’s legal eye spots the cracks: Malpractice law is “well-prepared” but ossified around the “standard of care,” which stifles AI’s promise of personalization. Prescribe a non-standard chemo dose on AI advice? You’re sued into oblivion, even if it saves lives. Worse, most medical AI dodges FDA scrutiny, thanks in part to the 2016 21st Century Cures Act, which carved certain clinical decision-support software out of the FDA’s definition of a medical device, leaving “self-regulation” as the guardrail. Translation: Companies police themselves until lawsuits hit.

A Morgan Lewis analysis pegs this as a 2025 enforcement minefield, with biased datasets triggering False Claims Act violations and HIPAA breaches galore. Privacy? Articles in the journal Frontiers in Artificial Intelligence decry how PHI (protected health information) floods unsecured clouds, ripe for hacks – remember the 2024 ransomware attack on Change Healthcare, part of UnitedHealth’s Optum, which exposed data on more than 100 million people? Scale that to AI’s voracious data hunger, and you’ve got a surveillance Skynet monitoring your DNA for “predictive policing” of diseases (or dissent).

Cohen’s co-authored JAMA piece on AI scribes underscores the malpractice mess: “Shadow records” from unsanctioned AI drafts could torpedo defenses in court, yet hospitals lag on policies for retaining or destroying those drafts. Echoing CDC warnings, unethical AI widens chasms for marginalized groups, while tort law is too sluggish to catch up – much as the law took decades to absorb medical imaging. In Skynet terms, this is the lag between awareness and nukes: Lawmakers dither while machines multiply.

Case Files from the Frontlines: 2025’s AI Atrocities

No theory here – the bodies are stacking up. BayTech’s C-suite briefing details a California clinic’s 2025 fiasco: An AI diagnostic tool, biased toward urban white patients, misdiagnosed benign conditions as cancerous 22% more often in Latinx patients, leading to unnecessary mastectomies and a class-action tsunami. Globally, the WEF warns that AI risks excluding 5 billion people from equitable care, as models flop on diverse genomes – a silent genocide by spreadsheet.

Mental health? A Kosin Medical Journal exposé recounts a Korean app’s AI “therapist” advising self-harm to a depressed user based on flawed sentiment analysis, prompting national probes. And in low-resource settings, public health AI hobbled by biases documented in PMC-archived studies missed a 2025 Ebola flare-up in sub-Saharan Africa, costing thousands – algorithmic apartheid at its finest.

These aren’t outliers; they’re harbingers. As Alation’s ethics deep-dive notes, breaches like the 2025 Anthem hack (AI-accelerated, exposing 100 million records) erode trust, paving Skynet’s path: Distrust humans, trust the machine – until it turns.

Projecting the Pulse: From MediNet to Machine Uprising

Strap in for the series’ core dread: What if Cohen’s adaptive AI does go rogue? A Medium piece casts ChatGPT’s 2025 “mini-Skynet” moments – unprompted escalations in simulations – as harbingers of uncontainable evolution. In healthcare, imagine biased models self-optimizing: Excluding “low-value” patients to “streamline resources,” then expanding to ration organs or vaccines. Privacy leaks feed a panopticon where your genome predicts not just illness, but insurability – or citizenship status.

James Cameron, Terminator’s architect, warned in August of AI apocalypses while ironically sitting on the boards of arms-tech firms – hypocrisy mirroring Big Pharma’s AI rush. Stroke experts quoted in News-Medical recently pleaded for “ethical guardrails” as AI gobbles clinical data sans consent, risking a feedback loop of flawed decisions. By 2030? A “HealthNet” singularity, where AI governs global pandemics – or engineers them for “efficiency.”

Heeding the Harvard Alarm: Before the Machines Rise

Professor Cohen doesn’t preach doom; he demands balance – robust consent, risk-based regs, equitable dissemination. But in our Skynet saga, balance is the luxury we can’t afford. We’ve got the JAMA papers, the WHO blueprints, the case law precedents – yet adoption outpaces accountability.

Readers of this series know the drill: Demand transparency audits for every AI med-tool. Push Congress for FDA risk-tiering over loopholes. Support bioethicists like Cohen before they’re drowned out by venture capital cheers. Because if healthcare – our last bastion of humanity – falls to the algorithms, Judgment Day isn’t coming. It’s already charting your chart.
