Mental health challenges, like major depressive disorder (MDD), affect millions worldwide, yet access to care remains limited, especially in underserved areas. Inspired by a recent class on AI in psychology, I’m excited to share my plan to develop “MindCheck,” an AI-powered chatbot designed to screen for MDD and contribute to the United Nations’ Sustainable Development Goal 3 (Good Health and Well-Being). This post outlines the journey ahead, from concept to deployment, showing how AI can make a real difference in mental health access.

Why MindCheck? The Power of AI in Psychology

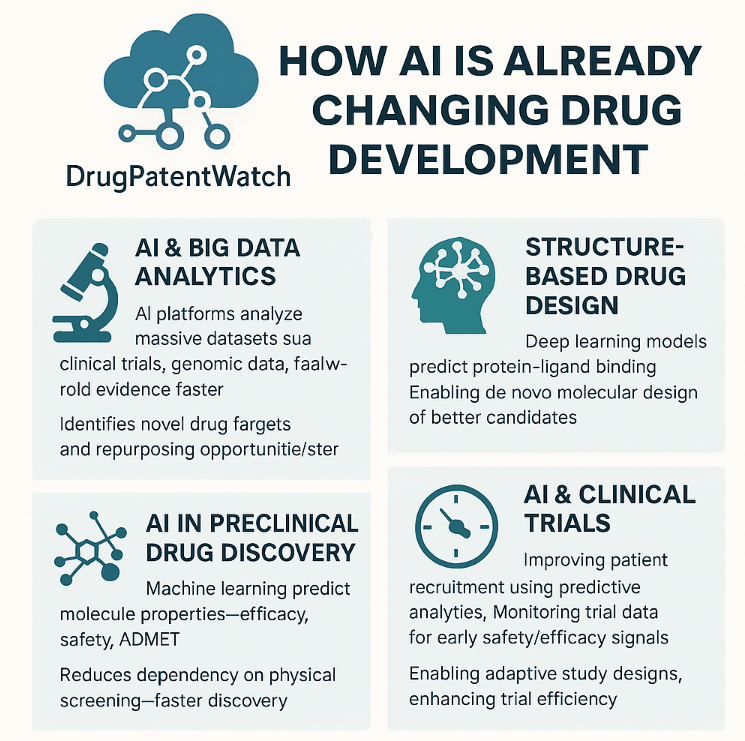

During a recent lecture, our instructor, Mark, explained how AI can mimic human cognitive processes, like decision-making, to streamline mental health diagnostics using frameworks like the DSM-5. Unlike humans, AI can process data quickly and apply the same criteria to every user without emotional bias, which makes it well suited to provisional screenings. However, it’s not perfect: self-reported data can lead to inaccuracies, and AI lacks a therapist’s empathy. My project, MindCheck, will harness AI’s strengths to create an accessible tool for early MDD detection, encouraging users to seek professional help when needed.

MindCheck will align with SDG 3 by addressing global mental health gaps: according to the World Health Organization (2023), more than 75% of people with mental disorders in low- and middle-income countries receive no treatment. By offering a free, open-source chatbot, I aim to empower individuals in remote areas to assess their mental health and find resources.

The Plan: Bringing MindCheck to Life

Turning this idea into reality will involve six key steps over 11 weeks. Here’s what I’ll do:

Step 1: Research and Planning (Weeks 1-2)

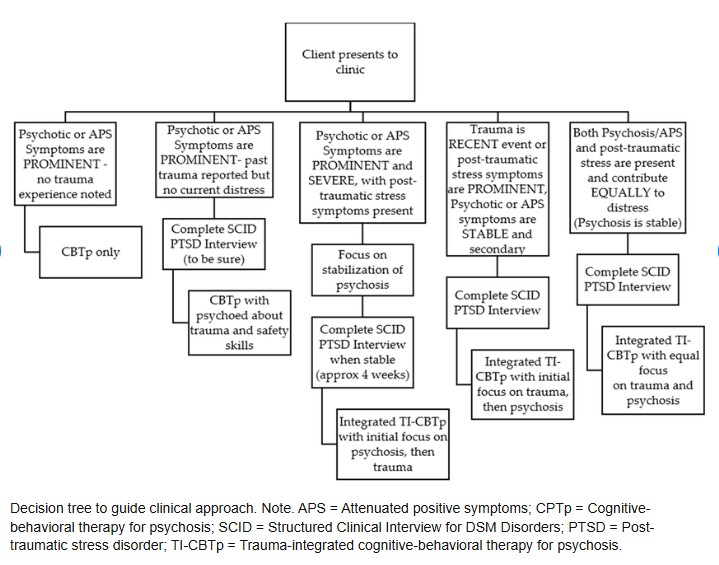

I’ll start by diving into the DSM-5 to map out MDD criteria, such as persistent sadness or loss of interest, into a flowchart for the chatbot’s logic. I’ll also study global mental health disparities to ensure the tool addresses real needs. Resources like online Python tutorials and ethical AI guidelines will shape the foundation.

| Phase | Activities | Resources |
|---|---|---|
| Literature Scan | Review AI in diagnostics | Graham et al. (2021); WHO reports |
| Criteria Mapping | Outline DSM-5 criteria for MDD | DSM-5 manual; flowcharts |
| SDG Alignment | Link to Goal 3 targets | UNESCO/UN websites |

Table 1: Planned Research Activities
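To make the criteria mapping concrete, here is a minimal Python sketch of how the DSM-5 symptom list might be encoded for the chatbot’s logic. It assumes the flowchart reduces to the Criterion A rule: at least five of nine symptoms during the same two-week period, with at least one being depressed mood or loss of interest or pleasure. The question wording is my own paraphrase rather than DSM-5 text. `Symptom`, `SYMPTOMS`, and `meets_screening_threshold` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Symptom:
    key: str
    question: str  # plain-language paraphrase, not DSM-5 wording
    core: bool = False  # True for depressed mood or loss of interest/pleasure


# The nine DSM-5 Criterion A symptom areas for MDD, paraphrased as questions
SYMPTOMS = [
    Symptom("mood", "Felt sad, empty, or hopeless most of the day?", core=True),
    Symptom("interest", "Lost interest or pleasure in most activities?", core=True),
    Symptom("appetite", "Had a noticeable change in appetite or weight?"),
    Symptom("sleep", "Slept much more or much less than usual?"),
    Symptom("psychomotor", "Felt restless or slowed down in a way others noticed?"),
    Symptom("fatigue", "Felt tired or low on energy nearly every day?"),
    Symptom("worth", "Felt worthless or excessively guilty?"),
    Symptom("concentration", "Had trouble concentrating or making decisions?"),
    Symptom("death", "Had recurring thoughts of death or self-harm?"),
]


def meets_screening_threshold(answers: dict[str, bool], weeks: int) -> bool:
    """Flag for referral if 5+ symptoms lasted 2+ weeks and at least one is core."""
    present = [s for s in SYMPTOMS if answers.get(s.key, False)]
    has_core = any(s.core for s in present)
    return weeks >= 2 and len(present) >= 5 and has_core
```

Consider five reported symptoms, one of them depressed mood, lasting two weeks (`{"mood": True, "sleep": True, "fatigue": True, "worth": True, "concentration": True}` with `weeks=2`). For that input the function returns `True`. The sketch deliberately covers only the symptom count. The remaining criteria, such as clinically significant distress or impairment and ruling out other causes, would need their own questions or a clinician.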

Step 2: Design (Weeks 3-4)

The chatbot will feature a simple, user-friendly interface with a question-tree system. For example, if a user reports feeling sad for two weeks, it’ll ask about other symptoms like sleep issues. Ethical design will include clear disclaimers (“This is not a diagnosis”) and no data storage to protect privacy. I’ll draw on American Psychological Association guidelines to ensure ethical integrity.

Figure 1: Planned visual representation of DSM-5 criteria for MindCheck’s question tree.
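The question tree from the design step could be represented as nested yes/no nodes. Below is a deliberately truncated sketch that assumes binary answers. The class names, question wording, and leaf messages are hypothetical placeholders. A full tree would walk through all nine symptoms before reaching a result. Because `run_tree` uses each answer only to pick the next branch, it keeps no record of what the user said, which fits the no-storage design.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Union

DISCLAIMER = "This is not a diagnosis."


@dataclass
class Leaf:
    message: str


@dataclass
class Question:
    text: str
    yes: Union[Question, Leaf]
    no: Union[Question, Leaf]


# Hypothetical fragment: each core symptom opens its own follow-up branch
TREE = Question(
    "Have you felt sad most days for the past two weeks?",
    yes=Question(
        "Have you had trouble sleeping, or slept much more than usual?",
        yes=Leaf("You reported symptoms that are worth discussing with a professional."),
        no=Leaf("You reported a possible symptom. Keep an eye on how you feel."),
    ),
    no=Question(
        "Have you lost interest in activities you usually enjoy?",
        yes=Leaf("You reported a possible symptom. Keep an eye on how you feel."),
        no=Leaf("You did not report the two core symptoms of depression."),
    ),
)


def run_tree(node: Union[Question, Leaf], ask: Callable[[str], bool]) -> str:
    """Follow yes/no branches to a leaf; answers are used once and never stored."""
    while isinstance(node, Question):
        node = node.yes if ask(node.text) else node.no
    return f"{node.message} {DISCLAIMER}"
```

Passing a function that always answers yes, as in `run_tree(TREE, lambda q: True)`, ends at the first leaf and returns its message followed by the disclaimer. In the Streamlit app, `ask` would be backed by widgets rather than a plain function. Keeping the tree as plain data makes it easy to test without the UI.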

Step 3: Implementation (Weeks 5-7)

I’ll code MindCheck using Python for the decision-tree logic and Streamlit for a web-based interface. Here’s a sneak peek at the planned code:

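```python
import streamlit as st

st.title("MindCheck: MDD Screening")
st.caption("This is a screening aid, not a diagnosis.")

# A form collects all answers before the app reruns, so the questions
# don't vanish the way widgets nested under st.button do.
with st.form("screening"):
    sadness = st.radio("Felt sad most days?", ["Yes", "No"])
    interest = st.radio("Lost interest in most activities?", ["Yes", "No"])
    # Additional questions for the other DSM-5 symptoms...
    submitted = st.form_submit_button("See results")

if submitted:
    answers = [sadness, interest]  # extend with the remaining answers
    count_symptoms = sum(answer == "Yes" for answer in answers)
    core_symptom = "Yes" in (sadness, interest)  # DSM-5 requires one core symptom
    if count_symptoms >= 5 and core_symptom:
        # Placeholder: link verified, region-appropriate helplines here.
        st.write("Consider seeking professional help.")
```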

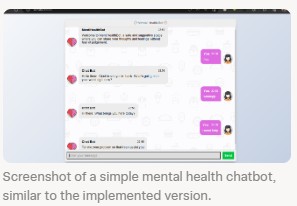

The tool will be tested for mobile compatibility, addressing issues like unclear user responses with follow-up prompts.Figure 2: Planned screenshot of a mental health chatbot, similar to MindCheck’s design.
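Here is one way the follow-up prompts might work, assuming MindCheck accepts free-text replies (for example, in a chat-style mobile layout) rather than only radio buttons. Each reply is normalized, and if it doesn’t map cleanly to yes or no, the bot re-asks with a clearer prompt. The word lists, retry limit, and function names are illustrative assumptions, not a finished design.

```python
from typing import Callable, Optional

YES_WORDS = {"yes", "y", "yeah", "yep", "sure"}
NO_WORDS = {"no", "n", "nope", "not really"}


def parse_answer(reply: str) -> Optional[bool]:
    """Map a free-text reply to True/False, or None if it is unclear."""
    text = reply.strip().lower().rstrip(".!")
    if text in YES_WORDS:
        return True
    if text in NO_WORDS:
        return False
    return None


def ask_with_follow_up(
    question: str, get_reply: Callable[[str], str], max_follow_ups: int = 2
) -> Optional[bool]:
    """Ask a question, re-prompting with a clearer follow-up if the reply is unclear."""
    prompt = question
    for _ in range(max_follow_ups + 1):
        answer = parse_answer(get_reply(prompt))
        if answer is not None:
            return answer
        prompt = f"Sorry, I didn't quite catch that. {question} Please answer yes or no."
    return None  # still unclear: the caller can skip or flag this question
```

After the retries run out, the function returns `None` instead of guessing. That leaves the decision to the caller, which could skip the question or mark the result as incomplete.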

Step 4: Testing (Weeks 8-9)

I’ll recruit 10 volunteers to test the chatbot by simulating MDD symptoms. Their feedback will help refine question phrasing and add empathetic responses. I’ll compare the bot’s outputs to the DSM-5 criteria, aiming for 90% accuracy. Self-report bias will be a challenge; clear instructions should reduce it, though not eliminate it.

| Tester | Simulated Symptoms | Bot Output | Feedback |
|---|---|---|---|
| 1 | 6/9 DSM-5 criteria | Referral suggested | Clear, non-judgmental |
| 2 | 3/9 DSM-5 criteria | No MDD flag | Add resources |
| Average | N/A | N/A | 85% satisfaction; enhance empathy |

Table 2: Expected Testing Results
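Scoring the test against the 90% accuracy target could be as simple as the sketch below. It assumes each volunteer’s scenario has an expected flag worked out by hand from the DSM-5 criteria. The function names and sample results are made up for illustration. The sketch also computes sensitivity, the share of MDD-positive scenarios the bot actually flagged. That is often the more important number for a screening tool, since a missed case can matter more than an extra referral.

```python
from typing import List, Tuple

# Each pair is (expected_flag, bot_flag) for one volunteer's simulated scenario;
# expected_flag comes from checking the scenario against the DSM-5 criteria by hand.
Result = Tuple[bool, bool]


def accuracy(results: List[Result]) -> float:
    """Share of scenarios where the bot's flag matches the expected flag."""
    return sum(expected == bot for expected, bot in results) / len(results)


def sensitivity(results: List[Result]) -> float:
    """Share of MDD-positive scenarios that the bot actually flagged."""
    flags = [bot for expected, bot in results if expected]
    return sum(flags) / len(flags) if flags else float("nan")


# Illustrative (not real) results: one missed positive out of ten scenarios
results = [(True, True)] * 4 + [(True, False)] + [(False, False)] * 5
print(f"accuracy={accuracy(results):.0%}, sensitivity={sensitivity(results):.0%}")
# prints: accuracy=90%, sensitivity=80%
```

If each of the 10 volunteers runs one scenario, every mismatch costs 10 percentage points. A 90% target therefore allows at most one wrong flag.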

Step 5: Deployment and Evaluation (Weeks 10-11)

MindCheck will be released on GitHub as an open-source tool, with a demo link for easy access. To stay consistent with the no-data-storage design, I’ll track only anonymous, aggregate usage counts, aiming for 40% of users to engage with the provided resources. Sharing the tool on global health forums will amplify its reach, supporting SDG 3’s mission.
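To keep the logs compatible with the no-data-storage promise, tracking could be limited to aggregate counters. Here is a minimal sketch under that assumption. `UsageStats` and the event names are hypothetical. It assumes the app records at most one `resource_clicked` event per completed screening, and it leaves out persisting the counts between sessions.

```python
from collections import Counter


class UsageStats:
    """Anonymous, aggregate counters: no user IDs, answers, or timestamps."""

    def __init__(self) -> None:
        self.counts = Counter()

    def record(self, event: str) -> None:
        """Increment an event counter, e.g. 'screening_completed'."""
        self.counts[event] += 1

    def resource_engagement_rate(self) -> float:
        """Resource clicks per completed screening (one click event per session)."""
        completed = self.counts["screening_completed"]
        return self.counts["resource_clicked"] / completed if completed else 0.0


stats = UsageStats()
for _ in range(5):
    stats.record("screening_completed")
for _ in range(2):
    stats.record("resource_clicked")
print(f"{stats.resource_engagement_rate():.0%} engaged (target: 40%)")
# prints: 40% engaged (target: 40%)
```

Only event totals are kept. The engagement rate can therefore be checked against the 40% target without linking any click to a person’s answers.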

Step 6: Ethical Considerations

Ethics will be central. I’ll ensure informed consent, minimize data collection, and check for biases in symptom questions. Disclaimers will clarify that MindCheck isn’t a diagnostic tool, reducing misdiagnosis risks, as noted in research by Graham et al. (2021).

Figure 3: Infographic on planned ethical integrations of AI in mental healthcare.
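Informed consent and data minimization can also be enforced in code, not only stated in a disclaimer. The sketch below is framework-agnostic, and `ScreeningSession` and its methods are hypothetical. No answer can be recorded until consent is given, and `finish()` discards the individual answers once the symptom count is computed. In the Streamlit app, a consent checkbox could call `give_consent()` before the questions appear.

```python
class ScreeningSession:
    """One screening held in memory only; nothing is written to disk."""

    def __init__(self) -> None:
        self.consented = False
        self._answers = {}  # symptom key -> True/False, kept only until finish()

    def give_consent(self) -> None:
        self.consented = True

    def record(self, symptom: str, present: bool) -> None:
        if not self.consented:
            raise PermissionError("Informed consent is required before screening.")
        self._answers[symptom] = present

    def finish(self) -> int:
        """Return the number of symptoms reported, then discard the answers."""
        count = sum(self._answers.values())
        self._answers.clear()
        return count


session = ScreeningSession()
session.give_consent()
session.record("mood", True)
session.record("sleep", False)
print(session.finish())  # prints 1
```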

What’s Next for MindCheck?

The chatbot should help raise awareness about MDD, but it won’t replace therapists; that was a key takeaway from our class. Future plans include adding natural language processing for more natural conversations and expanding to screen for other disorders. I also aim to present MindCheck at a mental health conference to gather expert feedback and scale its impact.

Challenges ahead include ensuring scalability and addressing self-report biases. By making MindCheck open-source, I hope to inspire others to contribute, creating a tool that truly serves global communities. This project aims to show how AI, when used ethically, can make mental health support more accessible.

Join the Journey

I’m excited to bring MindCheck to life and contribute to a healthier world. Want to stay updated or get involved? Follow my progress on this blog or connect with me on [insert social media link]. Let’s make mental health care accessible for all!

References

- American Psychiatric Association. (2013). Diagnostic and Statistical Manual of Mental Disorders (5th ed.). https://doi.org/10.1176/appi.books.9780890425596

- Graham, S., Depp, C., Lee, E. E., Nebeker, C., Tu, X., Kim, H. C., & Jeste, D. V. (2021). Artificial intelligence for mental healthcare: Clinical applications, barriers, facilitators, and artificial wisdom. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 6(9), 856–864. https://doi.org/10.1016/j.bpsc.2021.02.001

- Liu, J., Li, X., Wang, Y., & Zhang, H. (2025). The application of artificial intelligence in the field of mental health. BMC Psychiatry, 25(1), 1–12. https://doi.org/10.1186/s12888-025-06483-2